The default DC/OS Spinnaker installation provides reasonable defaults for trying out the service, but may not be sufficient for production use. You may require a different configuration depending on the context of the deployment.

Installing with Custom Configuration

The following are some examples of how to customize the installation of your Spinnaker instance.

In each case, you would create a new Spinnaker instance using the custom configuration as follows:

dcos package install spinnaker --options=sample-spinnaker.json

We recommend that you store your custom configuration in source control.

Installing multiple instances

By default, the Spinnaker service is installed with a service name of spinnaker. You may specify a different name using a custom service configuration as follows:

{
  "service": {
    "name": "spinnaker-other"
  }
}

When the above JSON configuration is passed to the dcos package install spinnaker command via the --options argument, the new service uses the name specified in that configuration:

dcos package install spinnaker --options=spinnaker-other.json

Multiple instances of Spinnaker may be installed into your DC/OS cluster by customizing the name of each instance. For example, you might have one instance of Spinnaker named spinnaker-staging and another named spinnaker-prod, each with its own custom configuration.
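If you maintain several instances, a small script can keep their options files consistent. The following is a minimal sketch, assuming Python 3.9+ and that each file only needs to override service.name. It writes spinnaker-staging.json and spinnaker-prod.json and prints the matching install commands rather than running them. The helper names are illustrative and are not part of the Spinnaker package.

```python
import json
from pathlib import Path

# Instance names from the staging/prod example above (illustrative).
INSTANCES = ["spinnaker-staging", "spinnaker-prod"]


def options_for(name: str) -> dict:
    """Minimal options document that only overrides the service name."""
    return {"service": {"name": name}}


def write_options(name: str, directory: Path = Path(".")) -> Path:
    """Write <name>.json into `directory` and return its path."""
    path = directory / f"{name}.json"
    path.write_text(json.dumps(options_for(name), indent=2) + "\n")
    return path


def install_command(options_file: Path) -> list[str]:
    """The `dcos package install` invocation for one options file."""
    return ["dcos", "package", "install", "spinnaker", f"--options={options_file}"]


if __name__ == "__main__":
    for instance in INSTANCES:
        options_file = write_options(instance)
        # Print instead of executing so the commands can be reviewed first.
        print(" ".join(install_command(options_file)))
```

Each generated file can then be kept in source control next to any other per-instance settings.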

Once installed under a custom name, the instance can be reached using dcos spinnaker CLI commands or directly over HTTP, as described below.

Installing into folders

In DC/OS 1.10 and later, services may be installed into folders by specifying a slash-delimited service name. For example:

{
  "service": {
    "name": "/foldered/path/to/spinnaker"
  }
}

The above example will install the service under a path of foldered => path => to => spinnaker. It can then be reached using dcos spinnaker CLI commands or directly over HTTP as described below.

Addressing named instances

After you’ve installed the service under a custom name or in a folder, you can address it from any dcos spinnaker CLI command with the --name argument. If --name is omitted, it defaults to the package name, spinnaker.

For example, if you had an instance named spinnaker-dev, the following command would invoke a pod list command against it:

dcos spinnaker --name=spinnaker-dev pod list

The same query over HTTP would be:

curl -H "Authorization:token=$auth_token" <dcos_url>/service/spinnaker-dev/v1/pod

Likewise, if you had an instance in a folder like /foldered/path/to/spinnaker, the following command would invoke a pod list command against it:

dcos spinnaker --name=/foldered/path/to/spinnaker pod list

Similarly, it could be queried directly over HTTP as follows:

curl -H "Authorization:token=$auth_token" <dcos_url>/service/foldered/path/to/spinnaker/v1/pod

You may add a -v (verbose) argument to any dcos spinnaker command to see the underlying HTTP queries that are being made. This can be a useful tool to see where the CLI is getting its information. In practice, dcos spinnaker commands are a thin wrapper around an HTTP interface provided by the DC/OS Spinnaker Service itself.
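The HTTP form of these queries can also be scripted. The sketch below assumes Python 3 with only the standard library. dcos_url and auth_token are inputs you supply, for example from `dcos config show core.dcos_url` and `dcos config show core.dcos_acs_token`. It strips the leading slash of a foldered name to build the /service/... path used in the curl examples above. If your cluster uses a self-signed certificate, urlopen will reject it unless you pass an ssl context that trusts the cluster CA; that step is left out here.

```python
import urllib.request


def service_base_url(dcos_url: str, service_name: str) -> str:
    """Map a plain or foldered service name to its Admin Router base URL."""
    # "/foldered/path/to/spinnaker" -> "<dcos_url>/service/foldered/path/to/spinnaker"
    return f"{dcos_url.rstrip('/')}/service/{service_name.strip('/')}"


def list_pods(dcos_url: str, service_name: str, auth_token: str) -> str:
    """HTTP equivalent of `dcos spinnaker --name=<service_name> pod list`."""
    request = urllib.request.Request(
        service_base_url(dcos_url, service_name) + "/v1/pod",
        headers={"Authorization": f"token={auth_token}"},
    )
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")
```

For example, `list_pods("https://dcos.example.com", "/foldered/path/to/spinnaker", token)` would query the foldered instance. The cluster URL here is a placeholder.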

Integration with DC/OS access controls

In Enterprise DC/OS, DC/OS access controls can be used to restrict access to your service. To give a non-superuser complete access to a service, grant them the following list of permissions:

dcos:adminrouter:service:marathon full

dcos:service:marathon:marathon:<service-name> full

dcos:service:adminrouter:<service-name> full

dcos:adminrouter:ops:mesos full

dcos:adminrouter:ops:slave full

Where <service-name> is your full service name, including the folder if it is installed in one.
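Granting the five permissions by hand is repetitive. The sketch below assumes Python 3.9+ and the Enterprise CLI's `dcos security org users grant <uid> <permission> <action>` form. It substitutes the service name into each template exactly as written in the list above, including any leading slash of a foldered name, so check your DC/OS version's permission reference if a grant is rejected. By default it only prints the commands (dry_run=True). The user ID is a placeholder you supply.

```python
import subprocess

# Permission templates from the list above; {service_name} is substituted verbatim.
PERMISSION_TEMPLATES = [
    "dcos:adminrouter:service:marathon",
    "dcos:service:marathon:marathon:{service_name}",
    "dcos:service:adminrouter:{service_name}",
    "dcos:adminrouter:ops:mesos",
    "dcos:adminrouter:ops:slave",
]


def grant_commands(uid: str, service_name: str) -> list[list[str]]:
    """Build one grant command per permission, each with the action `full`."""
    return [
        ["dcos", "security", "org", "users", "grant", uid,
         template.format(service_name=service_name), "full"]
        for template in PERMISSION_TEMPLATES
    ]


def grant_all(uid: str, service_name: str, dry_run: bool = True) -> None:
    """Print the commands, or run them one by one when dry_run is False."""
    for command in grant_commands(uid, service_name):
        if dry_run:
            print(" ".join(command))
        else:
            subprocess.run(command, check=True)
```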

Service Settings

Placement Constraints

Placement constraints allow you to customize where a service is deployed in the DC/OS cluster. Placement constraints use the Marathon operators syntax. For example, [["hostname", "UNIQUE"]] ensures that at most one pod instance is deployed per agent.

A common task is to specify a list of whitelisted systems to deploy to. To achieve this, use the following syntax for the placement constraint:

[["hostname", "LIKE", "10.0.0.159|10.0.1.202|10.0.3.3"]]
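You can build and sanity-check such a constraint locally before applying it, as sketched below. The sketch assumes Python 3.9+ and that the regular expression must match the entire hostname. It approximates the behavior for checking your pattern and is not Marathon's own matcher. In the example above each unescaped dot matches any character, so an optional escape flag is offered for stricter patterns. Where the resulting string goes in your options file depends on the package's configuration schema, which is not shown here.

```python
import json
import re


def hostname_whitelist_constraint(hosts: list[str], escape: bool = False) -> str:
    """Return a [["hostname", "LIKE", ...]] constraint as a JSON string.

    escape=False reproduces the example above exactly; escape=True makes
    each dot match only a literal dot.
    """
    alternatives = [re.escape(h) if escape else h for h in hosts]
    return json.dumps([["hostname", "LIKE", "|".join(alternatives)]])


def would_place_on(hostname: str, constraint: str) -> bool:
    """Rough local check, assuming the pattern must match the whole hostname."""
    field, operator, pattern = json.loads(constraint)[0]
    if field != "hostname" or operator != "LIKE":
        raise ValueError("only [hostname, LIKE, pattern] constraints are supported")
    return re.fullmatch(pattern, hostname) is not None
```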

Updating Placement Constraints

Clusters change, and your placement constraints will change with them. However, pods that are already running are not affected by changes in placement constraints. Altering a constraint might invalidate the current placement of a running pod, and the pod is not relocated automatically because doing so is a destructive action. We recommend the following procedure to update the placement constraints of a pod:

- Update the placement constraint definition in the service.

- For each affected pod, one at a time, perform a pod replace. This will (destructively) move the pod to be in accordance with the new placement constraints.
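The one-pod-at-a-time procedure can be scripted as sketched below, assuming Python 3.9+. The pod names are hypothetical placeholders; take the real ones from `dcos spinnaker pod list`. The script pauses for confirmation after each replace rather than trying to detect when the pod has recovered.

```python
import subprocess


def replace_commands(pods: list[str], service_name: str = "spinnaker") -> list[list[str]]:
    """One `pod replace` invocation per affected pod, in the given order."""
    return [
        ["dcos", "spinnaker", f"--name={service_name}", "pod", "replace", pod]
        for pod in pods
    ]


def rolling_replace(pods: list[str], service_name: str = "spinnaker") -> None:
    """Replace pods strictly one at a time, pausing for the operator in between."""
    for pod, command in zip(pods, replace_commands(pods, service_name)):
        subprocess.run(command, check=True)
        # Replacement is destructive: confirm the pod is running again
        # before moving on to the next one.
        input(f"Replaced {pod}; press Enter once it is running again... ")
```

For example, `rolling_replace(["pod-a-0", "pod-b-0"], service_name="spinnaker-dev")` would replace two hypothetical pods of the spinnaker-dev instance in turn.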

Zones

Requires: DC/OS 1.11 Enterprise or later.

Placement constraints can be applied to DC/OS zones by referring to the @zone key. For example, one could spread pods across a minimum of three different zones by including this constraint:

[["@zone", "GROUP_BY", "3"]]

For the @zone constraint to be applied correctly, DC/OS must have Fault Domain Awareness enabled and configured.

Virtual networks

DC/OS Spinnaker supports deployment on virtual networks on DC/OS (including the dcos overlay network), allowing each container (task) to have its own IP address and not use port resources on the agent machines. This can be specified by passing the following configuration during installation:

{
  "service": {
    "virtual_network_enabled": true
  }
}

User

By default, all pods’ containers are started as the system user “nobody”. If your system is configured to use another system user (for instance, you may have externally mounted persistent volumes with root’s permissions), you can set the user by defining a custom value for the service’s property “user”, for example:

{
  "service": {
    "properties": {
      "user": "root"
    }
  }
}
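The name, virtual network, and user fragments in this section all sit under the same top-level service key, so simply concatenating them would produce duplicate keys. A recursive merge combines them into one options document. This is a minimal sketch using only the Python standard library. The fragment values are copied from the examples above, and the output can be redirected to a file such as options.json.

```python
import json
from copy import deepcopy


def deep_merge(base: dict, override: dict) -> dict:
    """Return a copy of `base` with `override` merged in recursively (override wins)."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


# Fragments copied from the examples in this section.
FRAGMENTS = [
    {"service": {"name": "/foldered/path/to/spinnaker"}},
    {"service": {"virtual_network_enabled": True}},
    {"service": {"properties": {"user": "root"}}},
]

options: dict = {}
for fragment in FRAGMENTS:
    options = deep_merge(options, fragment)

print(json.dumps(options, indent=2))
```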

Custom Install

Spinnaker configuration

- Use the following command to download Spinnaker configuration templates to get started.

curl -O https://ecosystem-repo.s3.amazonaws.com/spinnaker/artifacts/0.3.1-1.9.2/config.tgz && tar -xzf config.tgz && cd config && chmod +x gen-optionsjson

The created config folder has the following yaml templates.

front50-local.yaml

clouddriver-local.yaml

echo-local.yaml

igor-local.yaml

- Tailor these Spinnaker yaml configuration files for your specific needs. The yaml can be entered via the Spinnaker configuration dialogs in the DC/OS console or passed in an options.json file on dcos package install.

front50-local.yaml

Front50 is the Spinnaker persistence service. The following section shows how to configure the AWS S3 (enabled=true) and GCS (enabled=false) persistence plugins in front50-local.yaml.

cassandra:
  enabled: false
spinnaker:
  cassandra:
    enabled: false
    embedded: true
  s3:
    enabled: true
    bucket: my-spinnaker-bucket
    rootFolder: front50
    endpoint: http://minio.marathon.l4lb.thisdcos.directory:9000
  gcs:
    enabled: false
    bucket: my-spinnaker-bucket
    bucketLocation: us
    rootFolder: front50
    project: my-project
    jsonPath: /mnt/mesos/sandbox/data/keys/gcp_key.json

The DC/OS Spinnaker front50 service can be configured to use secrets for AWS S3 and GCS credentials. All three secrets must exist, so create each of them with the following commands, giving the ones you are not using empty content.

dcos security secrets create -v <your-aws-access-key-id> spinnaker/aws_access_key_id

dcos security secrets create -v <your-aws-secret-access-key> spinnaker/aws_secret_access_key

dcos security secrets create -v <your-gcp-key> spinnaker/gcp_key
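Because all three secrets must exist, you can generate the create commands from the credentials you actually use and fill in empty content for the rest. The sketch below assumes Python 3.9+ and the secret paths shown above. By default (dry_run=True) it prints the commands with non-empty values redacted. Passing credentials with -v places them on the command line, where other local users may be able to see them in the process list.

```python
import subprocess

# Secret paths used by the front50 configuration above.
SECRET_PATHS = {
    "aws_access_key_id": "spinnaker/aws_access_key_id",
    "aws_secret_access_key": "spinnaker/aws_secret_access_key",
    "gcp_key": "spinnaker/gcp_key",
}


def secret_commands(values: dict[str, str]) -> list[list[str]]:
    """Build a create command for every secret; unused ones get empty content."""
    unknown = set(values) - set(SECRET_PATHS)
    if unknown:
        raise ValueError(f"unexpected secret names: {sorted(unknown)}")
    return [
        ["dcos", "security", "secrets", "create", "-v", values.get(name, ""), path]
        for name, path in SECRET_PATHS.items()
    ]


def create_secrets(values: dict[str, str], dry_run: bool = True) -> None:
    """Print the commands (values redacted) or run them when dry_run is False."""
    for command in secret_commands(values):
        if dry_run:
            shown = "<redacted>" if command[5] else '""'
            print(" ".join(command[:5] + [shown, command[6]]))
        else:
            subprocess.run(command, check=True)
```

For an S3-only setup, `create_secrets({"aws_access_key_id": "...", "aws_secret_access_key": "..."})` would print three commands, the last one creating spinnaker/gcp_key with empty content.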

For more configuration options see spinnaker/front50.

clouddriver-local.yaml

Clouddriver is the Spinnaker cloud provider service. The following section shows how to configure the Docker registry, DC/OS, and (commented out) Kubernetes provider plugins in clouddriver-local.yaml.

dockerRegistry:
  enabled: true
  accounts:
    - name: my-docker-registry-account
      address: https://index.docker.io/
      repositories:
        - library/nginx
        - library/postgres
      username: mesosphere
      # password: ...
dcos:
  enabled: true
  clusters:
    - name: my-dcos
      dcosUrl: https://leader.mesos
      insecureSkipTlsVerify: true
  accounts:
    - name: my-dcos-account
      dockerRegistries:
        - accountName: my-docker-registry-account
      clusters:
        - name: my-dcos
          uid: bootstrapuser
          password: deleteme
#kubernetes:
#  enabled: true
#  accounts:
#    - name: my-kubernetes-account
#      providerVersion: v2
#      namespaces:
#        - default
#      kubeconfigFile: ${MESOS_SANDBOX}/kubeconfig
#      dockerRegistries:
#        - accountName: my-docker-registry-account

For more configuration options see spinnaker/clouddriver.

gate-local.yaml (optional)

Gate is the Spinnaker API service. The following section shows how to configure OAuth2 (here against GitHub) in gate-local.yaml.

#security:
#  oauth2:
#    client:
#      clientId: ...
#      clientSecret: ...
#      userAuthorizationUri: https://github.com/login/oauth/authorize
#      accessTokenUri: https://github.com/login/oauth/access_token
#      scope: user:email
#    resource:
#      userInfoUri: https://api.github.com/user
#    userInfoMapping:
#      email: email
#      firstName:
#      lastName: name
#      username: login

echo-local.yaml (optional)

Echo is the Spinnaker notification service. The following section shows how to configure the email notification plugin in echo-local.yaml.

mail:
  enabled: false
#  from: <from-gmail-address>
#spring:
#  mail:
#    host: smtp.gmail.com
#    username: <from-gmail-address>
#    password: <app-password, see https://support.google.com/accounts/answer/185833?hl=en >
#    properties:
#      mail:
#        smtp:
#          auth: true
#          ssl:
#            enable: true
#          socketFactory:
#            port: 465
#            class: javax.net.ssl.SSLSocketFactory
#            fallback: false

For more configuration options see spinnaker/echo and spinnaker/spinnaker.

igor-local.yaml (optional)

Igor is the Spinnaker trigger service. The following section shows how to configure the dockerRegistry trigger plugin in igor-local.yaml.

dockerRegistry:
  enabled: true

For more configuration options see spinnaker/igor.

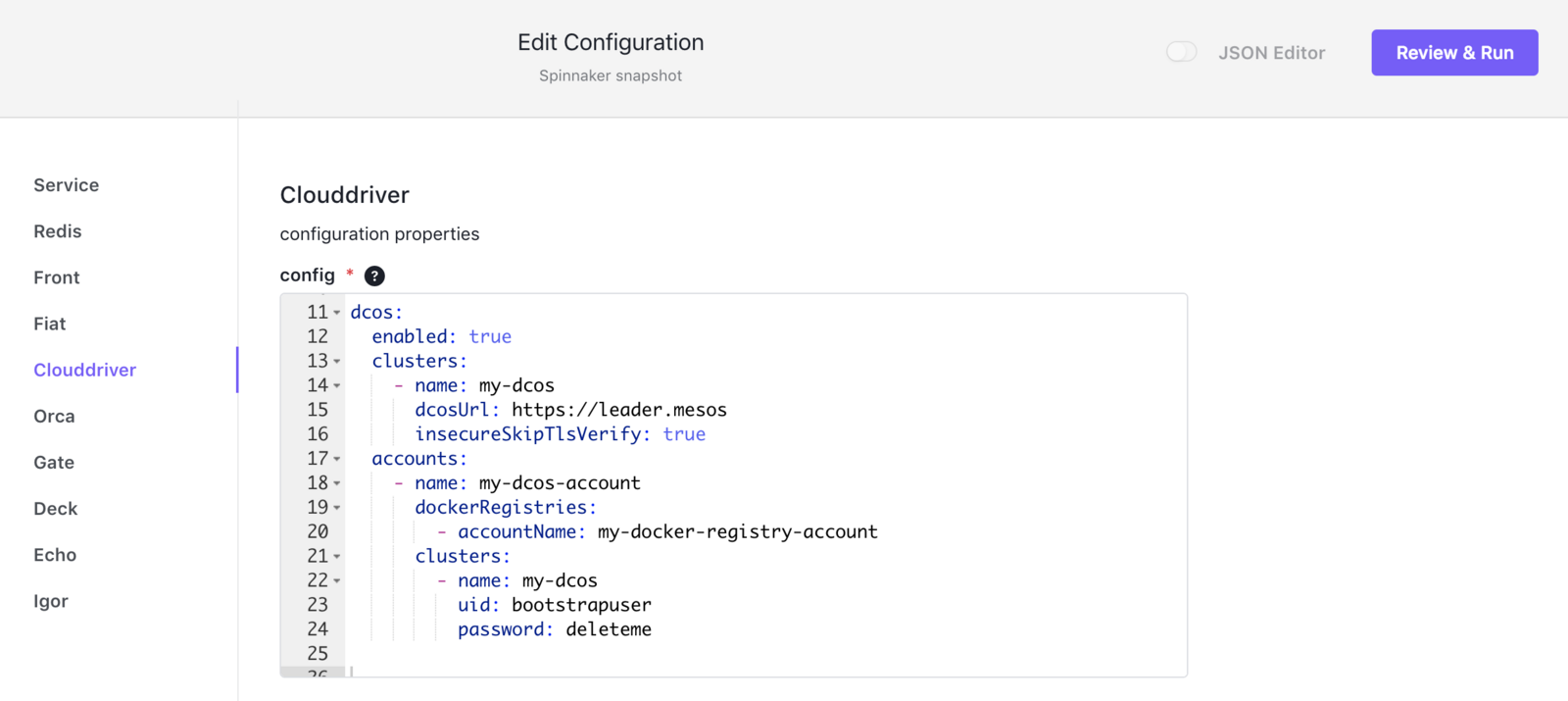

When installing the Spinnaker service via the DC/OS console, each Spinnaker service has its own section where you can enter the respective yaml configuration.

The screenshot above shows the sample configuration for the Clouddriver service.

DC/OS CLI install

The config folder that was created when we downloaded the config.tgz archive earlier also provides a tool, gen-optionsjson, to generate an options.json file. Once you have edited the yaml templates to your needs, run the tool in the config folder, passing the proxy hostname, which is typically the public agent hostname.

./gen-optionsjson <proxy-hostname>

Once you have the options.json file, you can install the Spinnaker service using the DC/OS CLI.

dcos package install --yes spinnaker --options=options.json

Edge-LB

Instead of the simple proxy we used in the quick start, you can also use Edge-LB. After installing Edge-LB, create an Edge-LB pool configuration file named spinnaker-edgelb.yaml with the following yaml, which also exposes minio.

apiVersion: V2
name: spinnaker
count: 1
haproxy:
  frontends:
    - bindPort: 9001
      protocol: HTTP
      linkBackend:
        defaultBackend: deck
    - bindPort: 8084
      protocol: HTTP
      linkBackend:
        defaultBackend: gate
    - bindPort: 9000
      protocol: HTTP
      linkBackend:
        defaultBackend: minio
  backends:
    - name: deck
      protocol: HTTP
      services:
        - endpoint:
            type: ADDRESS
            address: deck.spinnaker.l4lb.thisdcos.directory
            port: 9001
    - name: gate
      protocol: HTTP
      services:
        - endpoint:
            type: ADDRESS
            address: gate.spinnaker.l4lb.thisdcos.directory
            port: 8084
    - name: minio
      protocol: HTTP
      services:
        - endpoint:
            type: ADDRESS
            address: minio.marathon.l4lb.thisdcos.directory
            port: 9000

Use the following command to launch the pool.

dcos edgelb create spinnaker-edgelb.yaml
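Once the pool is running, a quick reachability check of each frontend can confirm that HAProxy is forwarding traffic. The sketch below assumes Python 3.9+, that you know the hostname or IP of the public agent running the pool, and that the three ports are reachable from your machine. It only reports the HTTP status per frontend. An error status still means something answered on the port, while HAProxy typically answers 503 when no backend server is available.

```python
import urllib.error
import urllib.request

# Frontend ports from the pool definition above.
FRONTENDS = {"deck": 9001, "gate": 8084, "minio": 9000}


def frontend_urls(public_agent: str) -> dict[str, str]:
    """Map each frontend name to the URL HAProxy should answer on."""
    return {name: f"http://{public_agent}:{port}/" for name, port in FRONTENDS.items()}


def probe(public_agent: str, timeout: float = 5.0) -> dict[str, str]:
    """GET each frontend once and report the HTTP status or the connection error."""
    results: dict[str, str] = {}
    for name, url in frontend_urls(public_agent).items():
        try:
            with urllib.request.urlopen(url, timeout=timeout) as response:
                results[name] = str(response.status)
        except urllib.error.HTTPError as err:
            # Something answered; a 503 here usually means no healthy backend.
            results[name] = str(err.code)
        except OSError as err:
            # Connection refused, DNS failure, timeout, etc.
            results[name] = f"unreachable: {err}"
    return results
```

For example, `print(probe("203.0.113.10"))` checks all three frontends on a placeholder public agent address.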

Spinnaker Documentation
