Using HAProxy with Marathon-LB or Edge-LB

If you have existing instances of Marathon-LB in your DC/OS cluster, or if you are using Edge-LB (DC/OS Enterprise), you can expose the Kubernetes API for a given Kubernetes cluster via the existing HAProxy built into Marathon-LB or Edge-LB.

These options, as documented below, are slightly less secure than Option 2 from the Exposing the Kubernetes API page, because they use a self-signed TLS certificate to expose the Kubernetes API endpoint. Exposing the Kubernetes API endpoint with Marathon-LB or Edge-LB using a signed certificate is possible but outside the scope of this document, as is exposing the Kubernetes API for multiple Kubernetes clusters. For details, see Exposing the Kubernetes API.

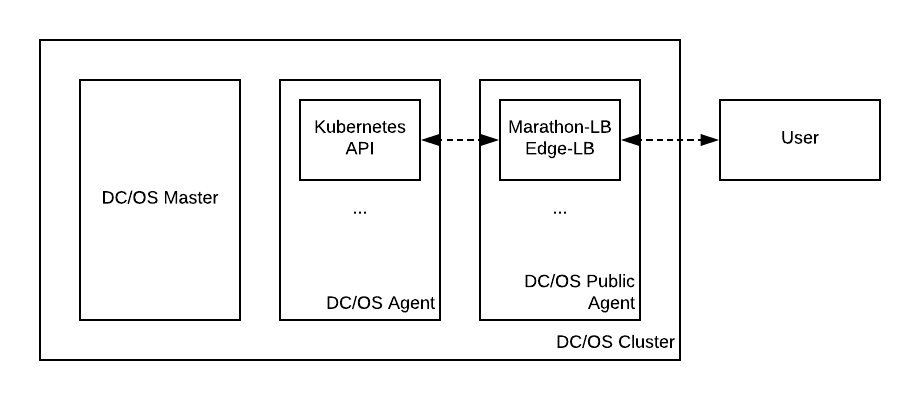

Both of these examples will generate a setup similar to the following:

Figure 1. Exposing the Kubernetes API using HAProxy

These examples assume that you want to expose the Kubernetes API for a cluster named kubernetes-cluster.

If your Kubernetes cluster has a different name, then some changes may need to be made; these are called out below.

Example 1: Using an existing Marathon-LB instance

Marathon-LB looks at all running Marathon applications and uses metadata (labels and other properties) on the application definitions to determine what applications and services to expose via HAProxy.

Specifically, a given instance of Marathon-LB will look for applications with a specific HAPROXY_GROUP label, and expose those that match the specified HAPROXY_GROUP.

The default HAPROXY_GROUP label that Marathon-LB looks for is external.

While Marathon-LB is primarily used to expose Marathon applications, it can be tricked into exposing non-Marathon endpoints with a dummy application. Here are two examples of how to achieve this:

- One with no TLS certificate verification.

- One with TLS verification between HAProxy and the Kubernetes API (but not between the user and HAProxy).

Both of these examples assume that Marathon-LB (version 1.12.1 or later) is already properly installed and configured (following the Marathon-LB installation instructions).

Marathon-LB without TLS Certificate Verification

For example, if you have a default Marathon-LB instance, you can run a Marathon application with the following definition, and it will expose the Kubernetes API:

    {
      "id": "/marathon-lb-kubernetes-cluster",
      "instances": 1,
      "cpus": 0.001,
      "mem": 16,
      "cmd": "tail -F /dev/null",
      "container": {
        "type": "MESOS"
      },
      "portDefinitions": [
        {
          "protocol": "tcp",
          "port": 0
        }
      ],
      "labels": {
        "HAPROXY_GROUP": "external",
        "HAPROXY_0_MODE": "http",
        "HAPROXY_0_PORT": "6443",
        "HAPROXY_0_SSL_CERT": "/etc/ssl/cert.pem",
        "HAPROXY_0_BACKEND_SERVER_OPTIONS": " timeout connect 10s\n timeout client 86400s\n timeout server 86400s\n timeout tunnel 86400s\n server kubernetescluster apiserver.kubernetes-cluster.l4lb.thisdcos.directory:6443 ssl verify none\n"
      }
    }

If your Kubernetes cluster is called something different from kubernetes-cluster, then apiserver.kubernetes-cluster.l4lb.thisdcos.directory:6443 should be modified to match the cluster’s name.

For example, if your Kubernetes cluster is called dev/kubernetes01, then you must replace

apiserver.kubernetes-cluster.l4lb.thisdcos.directory:6443

with

apiserver.devkubernetes01.l4lb.thisdcos.directory:6443

Notice how the slash in dev/kubernetes01 has been removed from the cluster’s name to form the hostname.
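The renaming rule above can be sketched in a few lines of Python. This is only an illustration: the function name apiserver_endpoint is hypothetical, and the sketch assumes that removing slashes is the only transformation needed, as in the dev/kubernetes01 example.

```python
def apiserver_endpoint(cluster_name: str, port: int = 6443) -> str:
    """Return the internal L4LB address of the API server for a cluster.

    Hypothetical helper: it only mirrors the slash-removal rule described
    in the text and does not query DC/OS.
    """
    # Slashes in the service name are dropped to form the hostname label,
    # e.g. "dev/kubernetes01" -> "devkubernetes01".
    host_label = cluster_name.replace("/", "")
    return f"apiserver.{host_label}.l4lb.thisdcos.directory:{port}"


print(apiserver_endpoint("kubernetes-cluster"))
print(apiserver_endpoint("dev/kubernetes01"))
```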

This application can be added to DC/OS through the DC/OS web interface, the Marathon API, or the dcos command line (assuming the JSON is saved as the file kubectl-proxy.json):

dcos marathon app add kubectl-proxy.json

Here is how this works:

- Marathon-LB identifies that the application marathon-lb-kubernetes-cluster has the HAPROXY_GROUP label set to external (change this if you’re using a different HAPROXY_GROUP for your Marathon-LB configuration).

- The instances, cpus, mem, cmd, and container fields create a dummy container that takes up minimal space and performs no operation.

- The single port indicates that this application has one “port” (this information is used by Marathon-LB).

- "HAPROXY_0_MODE": "http" indicates to Marathon-LB that the frontend and backend configuration for this particular service should be configured with http.

- "HAPROXY_0_PORT": "6443" tells Marathon-LB to expose the service on port 6443 (rather than the randomly-generated service port, which is ignored).

- "HAPROXY_0_SSL_CERT": "/etc/ssl/cert.pem" tells Marathon-LB to expose the service with the self-signed Marathon-LB certificate (which has no CN).

- The last label, HAPROXY_0_BACKEND_SERVER_OPTIONS, indicates that Marathon-LB should forward traffic to the endpoint apiserver.kubernetes-cluster.l4lb.thisdcos.directory:6443 rather than to the dummy application, and that the connection should be made using TLS without verification. Also, large timeout values are used so that calls such as kubectl logs -f and kubectl exec -i -t do not terminate abruptly after a short period.
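To make the role of each field concrete, the following Python sketch assembles the same application definition from its parts. It is not an official tool: build_proxy_app and its parameters are hypothetical names, and the values are copied from the definition above. The group and port arguments correspond to the HAPROXY_GROUP and HAPROXY_0_PORT labels.

```python
import json


def build_proxy_app(endpoint: str, group: str = "external", port: int = 6443) -> dict:
    """Build the dummy Marathon app that makes Marathon-LB proxy to `endpoint`.

    Hypothetical helper; the field values mirror the JSON definition in the text.
    """
    # Long timeouts keep streaming calls (kubectl logs -f, kubectl exec) alive;
    # the server line points HAProxy at the API server over unverified TLS.
    server_options = (
        " timeout connect 10s\n"
        " timeout client 86400s\n"
        " timeout server 86400s\n"
        " timeout tunnel 86400s\n"
        f" server kubernetescluster {endpoint} ssl verify none\n"
    )
    return {
        "id": "/marathon-lb-kubernetes-cluster",
        # Minimal no-op container: it exists only to carry the labels.
        "instances": 1,
        "cpus": 0.001,
        "mem": 16,
        "cmd": "tail -F /dev/null",
        "container": {"type": "MESOS"},
        # One port definition so that the HAPROXY_0_* labels have an index 0.
        "portDefinitions": [{"protocol": "tcp", "port": 0}],
        "labels": {
            "HAPROXY_GROUP": group,
            "HAPROXY_0_MODE": "http",
            "HAPROXY_0_PORT": str(port),
            "HAPROXY_0_SSL_CERT": "/etc/ssl/cert.pem",
            "HAPROXY_0_BACKEND_SERVER_OPTIONS": server_options,
        },
    }


app = build_proxy_app("apiserver.kubernetes-cluster.l4lb.thisdcos.directory:6443")
print(json.dumps(app, indent=2))
```

The printed JSON can be saved as kubectl-proxy.json and added with the dcos marathon app add command shown earlier.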

Marathon-LB with TLS Certificate Verification between HAProxy and the Kubernetes API

Alternatively, if you are using DC/OS Enterprise, you can modify the dummy application as follows so that HAProxy verifies its connection to the target Kubernetes API. External clients will still see an invalid, self-signed certificate:

    {
      "id": "/marathon-lb-kubernetes-cluster",
      "instances": 1,
      "cpus": 0.001,
      "mem": 16,
      "cmd": "tail -F /dev/null",
      "container": {
        "type": "MESOS"
      },
      "portDefinitions": [
        {
          "protocol": "tcp",
          "port": 0
        }
      ],
      "labels": {
        "HAPROXY_GROUP": "external",
        "HAPROXY_0_MODE": "http",
        "HAPROXY_0_PORT": "6443",
        "HAPROXY_0_SSL_CERT": "/etc/ssl/cert.pem",
        "HAPROXY_0_BACKEND_SERVER_OPTIONS": " timeout connect 10s\n timeout client 86400s\n timeout server 86400s\n timeout tunnel 86400s\n server kubernetescluster apiserver.kubernetes-cluster.l4lb.thisdcos.directory:6443 ssl verify required ca-file /mnt/mesos/sandbox/.ssl/ca-bundle.crt\n"
      }
    }

If your Kubernetes cluster is called something different from kubernetes-cluster, then apiserver.kubernetes-cluster.l4lb.thisdcos.directory:6443 should be modified to match the cluster’s name.

For example, if your Kubernetes cluster is called dev/kubernetes01, then you must replace

apiserver.kubernetes-cluster.l4lb.thisdcos.directory:6443

with

apiserver.devkubernetes01.l4lb.thisdcos.directory:6443

Notice how the slash in dev/kubernetes01 has been removed from the cluster’s name to form the hostname.

Again, this application can be added to DC/OS through the DC/OS web interface, the Marathon API, or the dcos command line (assuming the JSON is saved as the file kubectl-proxy.json):

dcos marathon app add kubectl-proxy.json
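The only difference between the two Marathon-LB definitions is the server line in HAPROXY_0_BACKEND_SERVER_OPTIONS. As a rough sketch, the Python function below rewrites that label in an existing definition. The function name require_backend_verification is hypothetical; the CA bundle path is the one used in the definition above.

```python
import copy

CA_BUNDLE = "/mnt/mesos/sandbox/.ssl/ca-bundle.crt"
LABEL = "HAPROXY_0_BACKEND_SERVER_OPTIONS"


def require_backend_verification(app: dict, ca_file: str = CA_BUNDLE) -> dict:
    """Return a copy of `app` whose backend server line verifies the API certificate.

    Hypothetical helper: it only swaps "ssl verify none" for
    "ssl verify required ca-file <path>" and leaves the input untouched.
    """
    updated = copy.deepcopy(app)
    options = updated["labels"][LABEL]
    if "ssl verify none" not in options:
        raise ValueError("expected an 'ssl verify none' server line")
    updated["labels"][LABEL] = options.replace(
        "ssl verify none", f"ssl verify required ca-file {ca_file}"
    )
    return updated


# Trimmed-down definition containing only the label that changes.
unverified = {
    "labels": {
        LABEL: " server kubernetescluster "
        "apiserver.kubernetes-cluster.l4lb.thisdcos.directory:6443 ssl verify none\n"
    }
}
verified = require_backend_verification(unverified)
print(verified["labels"][LABEL])
```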

Example 2: Auto exposure via Edge-LB >= 1.5.0

The cluster’s control plane tasks are launched with labels that will trigger an Auto Pool to start.

By default, the cluster’s Service Name (for example, dev/kubernetes01) is used as the path prefix. To find the endpoint, run:

dcos edgelb endpoints auto-default

NAME PORT INTERNAL IPS EXTERNAL IPS

frontend_0.0.0.0_6443 6443 172.16.7.60 54.184.41.74

stats 9090 172.16.7.60 54.184.41.74

Public/private IPs metadata is inaccurate in case of pools that use virtual networks.

Assuming a cluster Service Name of dev/kubernetes01, the kubeconfig can be set up with:

dcos kubernetes cluster kubeconfig --cluster-name=dev/kubernetes01 --activate-context --apiserver-url=https://54.184.41.74:6443/dev/kubernetes01 --context-name=dev/kubernetes01 --force-overwrite --insecure-skip-tls-verify
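The --apiserver-url value combines the external IP and port reported by the endpoints command with the path prefix. The Python sketch below derives it from the sample output above. It assumes whitespace-separated columns with a single IP address per column, exactly as in that sample; real output may differ, and the function name apiserver_url is hypothetical.

```python
SAMPLE_OUTPUT = """\
NAME                   PORT  INTERNAL IPS  EXTERNAL IPS
frontend_0.0.0.0_6443  6443  172.16.7.60   54.184.41.74
stats                  9090  172.16.7.60   54.184.41.74
"""


def apiserver_url(endpoints_output: str, service_name: str, port: int = 6443) -> str:
    """Build the external API server URL from `dcos edgelb endpoints` output.

    Assumes four whitespace-separated columns per data row:
    name, port, internal IP, external IP.
    """
    for line in endpoints_output.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) == 4 and fields[0].startswith("frontend_") and fields[1] == str(port):
            external_ip = fields[3]
            # The service name is the default path prefix.
            return f"https://{external_ip}:{port}/{service_name.strip('/')}"
    raise LookupError(f"no frontend found on port {port}")


print(apiserver_url(SAMPLE_OUTPUT, "dev/kubernetes01"))
```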

Various options can be set when launching the cluster to control the labels and exposure settings.

| option | default value | description |
|---|---|---|
| kubernetes.apiserver_edgelb.expose | true | enable or disable exposure for just this cluster |
| kubernetes.apiserver_edgelb.template | default | the pool-template to expose with |
| kubernetes.apiserver_edgelb.certificate | $AUTOCERT | the secret name of a certificate or $AUTOCERT |
| kubernetes.apiserver_edgelb.port | 6443 | the frontend port to use |
| kubernetes.apiserver_edgelb.path | /{{service.name}} | the path to use on the frontend |
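As an illustration of how these options might be written in an options file, the Python sketch below expands the dotted names into nested JSON. This rests on an assumption not stated in this document: that each dot in an option name corresponds to one level of nesting, as is common for DC/OS package options. Check the package's option schema before relying on it. The helper name nest_options is hypothetical.

```python
import json


def nest_options(flat: dict) -> dict:
    """Expand dotted option names into nested dictionaries.

    Assumption: one nesting level per dot in the option name.
    """
    nested: dict = {}
    for dotted_key, value in flat.items():
        node = nested
        *parents, leaf = dotted_key.split(".")
        for part in parents:
            node = node.setdefault(part, {})
        node[leaf] = value
    return nested


# Default values taken from the table above.
options = nest_options({
    "kubernetes.apiserver_edgelb.expose": True,
    "kubernetes.apiserver_edgelb.port": 6443,
    "kubernetes.apiserver_edgelb.path": "/{{service.name}}",
})
print(json.dumps(options, indent=2))
```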

Example 3: Creating an Edge-LB Pool

If, instead of using Marathon-LB, you are using Edge-LB in your DC/OS cluster, you can create an Edge-LB pool to expose the target Kubernetes API to clients.

This example does not validate certificates either between Kubernetes clients and HAProxy or between HAProxy and the Kubernetes API. These validations are achievable but are outside the scope of this document.

    {
      "apiVersion": "V2",
      "name": "edgelb-kubernetes-cluster",
      "count": 1,
      "autoCertificate": true,
      "haproxy": {
        "frontends": [{
          "bindPort": 6443,
          "protocol": "HTTPS",
          "certificates": [
            "$AUTOCERT"
          ],
          "linkBackend": {
            "defaultBackend": "kubernetes-cluster"
          }
        }],
        "backends": [{
          "name": "kubernetes-cluster",
          "protocol": "HTTPS",
          "services": [{
            "mesos": {
              "frameworkName": "kubernetes-cluster",
              "taskNamePattern": "kube-control-plane"
            },
            "endpoint": {
              "portName": "apiserver"
            }
          }]
        }],
        "stats": {
          "bindPort": 6090
        }
      }
    }

If your Kubernetes cluster is called something different from kubernetes-cluster, then the frameworkName should be modified to match the cluster’s name.

For example, if your Kubernetes service is located at dev/kubernetes01, then replace "frameworkName": "kubernetes-cluster" with "frameworkName": "dev/kubernetes01".
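For clusters with other names, the pool definition can be generated rather than edited by hand. The Python sketch below rebuilds the definition above with the framework name as a parameter. The function build_pool and its parameters are hypothetical names; the optional stats_bind_address argument sets bindAddress in the stats block, which limits where the stats endpoint listens.

```python
import json
from typing import Optional


def build_pool(
    framework_name: str,
    bind_port: int = 6443,
    stats_port: int = 6090,
    stats_bind_address: Optional[str] = None,
) -> dict:
    """Build an Edge-LB pool definition that exposes one cluster's API server.

    Hypothetical helper; the structure mirrors the JSON definition in the text.
    """
    backend_name = "kubernetes-cluster"
    stats = {"bindPort": stats_port}
    if stats_bind_address is not None:
        # For example "127.0.0.1" to keep the stats endpoint off external interfaces.
        stats["bindAddress"] = stats_bind_address
    return {
        "apiVersion": "V2",
        "name": "edgelb-kubernetes-cluster",
        "count": 1,
        "autoCertificate": True,
        "haproxy": {
            "frontends": [{
                "bindPort": bind_port,
                "protocol": "HTTPS",
                "certificates": ["$AUTOCERT"],
                "linkBackend": {"defaultBackend": backend_name},
            }],
            "backends": [{
                "name": backend_name,
                "protocol": "HTTPS",
                "services": [{
                    # Only frameworkName changes with the cluster name.
                    "mesos": {
                        "frameworkName": framework_name,
                        "taskNamePattern": "kube-control-plane",
                    },
                    "endpoint": {"portName": "apiserver"},
                }],
            }],
            "stats": stats,
        },
    }


pool = build_pool("dev/kubernetes01", stats_bind_address="127.0.0.1")
print(json.dumps(pool, indent=2))
```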

This example assumes that Edge-LB (version 1.0.3 or later) is already properly installed and configured (following the Edge-LB installation instructions):

1. Create an edgelb-kubernetes-cluster-pool.json file with the above contents.

2. Create the Edge-LB pool with the following command:

dcos edgelb create edgelb-kubernetes-cluster-pool.json

This creates an Edge-LB pool with the following configuration:

- One (1) instance of HAProxy, running on a public DC/OS agent node.

- Exposes port 6443 with TLS and a self-signed certificate.

- Forwards connections on port 6443 to the Mesos task matching framework name kubernetes-cluster and task name matching regex kube-control-plane, on the port labeled apiserver.

- Listens on port 6090 for the HAProxy stats endpoint. Note that this will, by default, expose this port externally; if you do not want this exposed externally, you could add a "bindAddress":"127.0.0.1" within the stats block.

Kubernetes Documentation
