With DC/OS, you can configure Mesos Mount disk resources across your cluster by mounting storage resources on agents using a well-known path.

When a DC/OS agent starts, it scans for volumes that match the pattern /dcos/volume<N>, where <N> is an integer. The agent is then automatically configured to offer these disk resources to other services.
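The naming rule can be illustrated with a short Python sketch. It is not the DC/OS implementation. `matching_volumes` and `mount_points_from_proc` are hypothetical helpers, and the pattern assumes that `<N>` is one or more decimal digits with nothing after it. On a Linux agent, the candidate mount points could come from `/proc/mounts`, whose second field is the mount point.

```python
import re

# Assumption: <N> is one or more decimal digits and nothing may follow it.
VOLUME_PATTERN = re.compile(r"/dcos/volume\d+")


def mount_points_from_proc(text):
    """Extract mount points (the second field) from /proc/mounts-style text.

    Note: /proc/mounts escapes spaces as \\040; this sketch does not decode them.
    """
    points = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) >= 2:
            points.append(fields[1])
    return points


def matching_volumes(mount_points):
    """Keep only mount points that follow the /dcos/volume<N> naming rule."""
    return [path for path in mount_points if VOLUME_PATTERN.fullmatch(path)]


if __name__ == "__main__":
    sample = (
        "/dev/sda1 / ext4 rw,relatime 0 0\n"
        "/dev/loop0 /dcos/volume0 ext4 rw,relatime 0 0\n"
        "/dev/sdb1 /dcos/volume12 xfs rw,relatime 0 0\n"
        "/dev/sdc1 /dcos/volumeX ext4 rw,relatime 0 0\n"
    )
    print(matching_volumes(mount_points_from_proc(sample)))
    # ['/dcos/volume0', '/dcos/volume12']
```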

Example using a loopback device

In this example, a disk resource is added to a DC/OS agent post-install on a running cluster. The same steps can be used pre-install, in which case you do not need to stop services or clear the agent state.

This example covers adding resources only; the same steps cannot be used to remove resources.

- Connect to an agent in the cluster with SSH.

- Examine the current agent resource state.

      cat /var/lib/dcos/mesos-resources
      # Generated by make_disk_resources.py on 2016-05-05 17:04:29.868595
      #
      MESOS_RESOURCES='[{"ranges": {"range": [{"end": 21, "begin": 1}, {"end": 5050, "begin": 23}, {"end": 32000, "begin": 5052}]}, "type": "RANGES", "name": "ports"}, {"role": "*", "type": "SCALAR", "name": "disk", "scalar": {"value": 47540}}]'

  Note that there are no references yet for /dcos/volume0.

- Stop the agent.

- Clear the agent state.

  - Cache the volume mount discovery resource state with this command:

        sudo mv -f /var/lib/dcos/mesos-resources /var/lib/dcos/mesos-resources.cache

    DC/OS checks this file later to generate a new resource state for the agent.

  - Remove the agent checkpoint state with this command:

        sudo rm -f /var/lib/mesos/slave/meta/slaves/latest

- Create a 200 MB loopback device.

      sudo mkdir -p /dcos/volume0
      sudo dd if=/dev/zero of=/root/volume0.img bs=1M count=200
      sudo losetup /dev/loop0 /root/volume0.img
      sudo mkfs -t ext4 /dev/loop0
      sudo losetup -d /dev/loop0

  This is suitable for testing purposes only. Mount volumes must have at least 200 MB of free space available. DC/OS reserves 100 MB on each volume, and that space is not available to other services.

- Create an fstab entry and mount the volume.

  The fstab entry ensures that the volume is mounted automatically at boot time. A systemd mount unit could be used for the same purpose.

      echo "/root/volume0.img /dcos/volume0 auto loop 0 2" | sudo tee -a /etc/fstab
      sudo mount /dcos/volume0

- Reboot.

      sudo reboot

- SSH to the agent and review the journald logs for references to the new volume /dcos/volume0.

      journalctl -b | grep '/dcos/volume0'

  In particular, there should be an entry for the agent starting up and the new volume0 Mount disk resource:

      May 05 19:18:40 dcos-agent-public-01234567000001 systemd[1]: Mounting /dcos/volume0...
      May 05 19:18:42 dcos-agent-public-01234567000001 systemd[1]: Mounted /dcos/volume0.
      May 05 19:18:46 dcos-agent-public-01234567000001 make_disk_resources.py[888]: Found matching mounts : [('/dcos/volume0', 74)]
      May 05 19:18:46 dcos-agent-public-01234567000001 make_disk_resources.py[888]: Generated disk resources map: [{'name': 'disk', 'type': 'SCALAR', 'disk': {'source': {'mount': {'root': '/dcos/volume0'}, 'type': 'MOUNT'}}, 'role': '*', 'scalar': {'value': 74}}, {'name': 'disk', 'type': 'SCALAR', 'role': '*', 'scalar': {'value': 47540}}]
      May 05 19:18:58 dcos-agent-public-01234567000001 mesos-slave[1891]: " --oversubscribed_resources_interval="15secs" --perf_duration="10secs" --perf_interval="1mins" --port="5051" --qos_correction_interval_min="0ns" --quiet="false" --recover="reconnect" --recovery_timeout="15mins" --registration_backoff_factor="1secs" --resources="[{"name": "ports", "type": "RANGES", "ranges": {"range": [{"end": 21, "begin": 1}, {"end": 5050, "begin": 23}, {"end": 32000, "begin": 5052}]}}, {"name": "disk", "type": "SCALAR", "disk": {"source": {"mount": {"root": "/dcos/volume0"}, "type": ""}}, "role": "*", "scalar": {"value": 74}}, {"name": "disk", "type": "SCALAR", "role": "*", "scalar": {"value": 47540}}]" --revocable_cpu_low_priority="true" --sandbox_directory="/mnt/mesos/sandbox" --slave_subsystems="cpu,memory" --strict="true" --switch_user="true" --systemd_enable_support="true" --systemd_runtime_directory="/run/systemd/system" --version="false" --work_dir="/var/lib/mesos/slave"
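The before-and-after check of the resource state can also be scripted. The Python sketch below reads a file in the format shown by `cat /var/lib/dcos/mesos-resources` and lists any Mount disk resources it contains. `load_resources` and `mount_disks` are hypothetical helpers. The sketch assumes that the regenerated file uses the same JSON shape as the "Generated disk resources map" journal entry. In that shape, a Mount disk has `disk.source.type` set to `MOUNT` and its path in `disk.source.mount.root`.

```python
import json
import shlex

# Contents shown by `cat /var/lib/dcos/mesos-resources` before the volume was added.
BEFORE = """# Generated by make_disk_resources.py on 2016-05-05 17:04:29.868595
#
MESOS_RESOURCES='[{"ranges": {"range": [{"end": 21, "begin": 1}, {"end": 5050, "begin": 23}, {"end": 32000, "begin": 5052}]}, "type": "RANGES", "name": "ports"}, {"role": "*", "type": "SCALAR", "name": "disk", "scalar": {"value": 47540}}]'
"""


def load_resources(text):
    """Return the resource list from the MESOS_RESOURCES='...' line, or []."""
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("MESOS_RESOURCES="):
            # shlex removes the single quotes around the JSON value.
            tokens = shlex.split(line.split("=", 1)[1])
            return json.loads(tokens[0]) if tokens else []
    return []


def mount_disks(resources):
    """Map each Mount disk root path to its advertised size in MB."""
    disks = {}
    for res in resources:
        source = res.get("disk", {}).get("source", {})
        if res.get("name") == "disk" and source.get("type") == "MOUNT":
            disks[source["mount"]["root"]] = res["scalar"]["value"]
    return disks


if __name__ == "__main__":
    print(mount_disks(load_resources(BEFORE)))  # {} (no /dcos/volume0 yet)
    # On an agent, assuming the file has been regenerated after the reboot:
    # with open("/var/lib/dcos/mesos-resources") as f:
    #     print(mount_disks(load_resources(f.read())))
```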

Example using a mount volume within a Marathon app

    {
      "id": "/mount-test-svc1",
      "instances": 1,
      "cpus": 0.1,
      "mem": 128,
      "networks": [
        {
          "mode": "container/bridge"
        }
      ],
      "disk": 0,
      "gpus": 0,
      "backoffSeconds": 1,
      "backoffFactor": 1.15,
      "maxLaunchDelaySeconds": 300,
      "container": {
        "type": "DOCKER",
        "volumes": [
          {
            "persistent": {
              "size": 25,
              "type": "mount"
            },
            "mode": "RW",
            "containerPath": "volume0"
          }
        ],
        "docker": {
          "image": "nginx",
          "privileged": false,
          "forcePullImage": false
        },
        "portMappings": [
          {
            "containerPort": 80,
            "hostPort": 0,
            "servicePort": 10101,
            "protocol": "tcp",
            "name": "httpport",
            "labels": {
              "VIP_0": "/mount-test-svc1:80"
            }
          }
        ]
      },
      "healthChecks": [
        {
          "gracePeriodSeconds": 300,
          "intervalSeconds": 60,
          "timeoutSeconds": 20,
          "maxConsecutiveFailures": 3,
          "portIndex": 0,
          "path": "/",
          "protocol": "MESOS_HTTP",
          "delaySeconds": 15
        }
      ],
      "upgradeStrategy": {
        "minimumHealthCapacity": 0.5,
        "maximumOverCapacity": 0
      },
      "unreachableStrategy": "disabled",
      "killSelection": "YOUNGEST_FIRST",
      "requirePorts": true,
      "labels": {
        "HAPROXY_GROUP": "external"
      }
    }

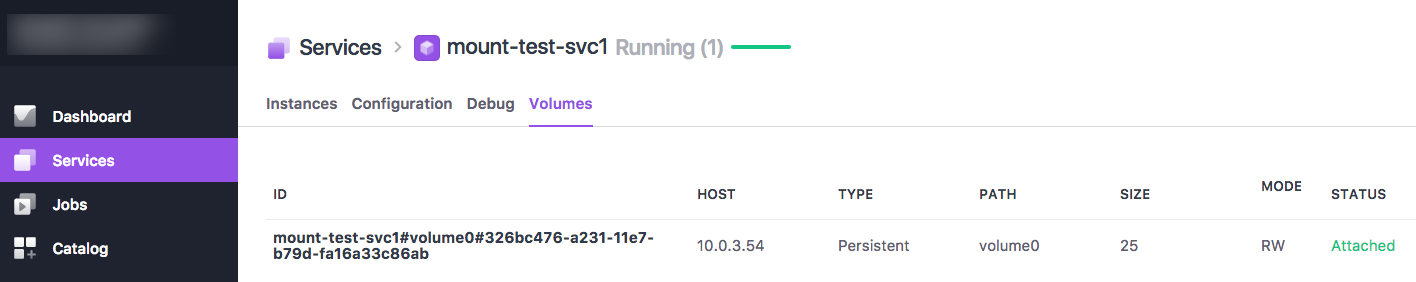

After running this service, navigate to the Services > Volumes tab in the web interface:

Figure 1. Services > Volumes tab
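A Mount disk is indivisible. A mount volume request is therefore satisfied by a single Mount disk of at least `persistent.size` MB, and the service reserves that whole disk rather than only the requested amount. The Python sketch below models that selection rule. `candidate_mount_disks` and `reserved_mb` are hypothetical helpers, not Marathon's scheduling code. The sizes come from the examples in this page: the 25 MB request in the app definition and the 74 MB `/dcos/volume0` reported in the journal.

```python
def candidate_mount_disks(mount_disks, requested_mb):
    """Return the roots of Mount disks large enough for a mount volume.

    mount_disks maps a Mount disk root path to its size in MB. A Mount disk
    cannot be split and sizes are never combined, so only a single disk of
    at least requested_mb fits.
    """
    return sorted(root for root, size in mount_disks.items() if size >= requested_mb)


def reserved_mb(mount_disks, root):
    """Size actually reserved when root is chosen: the entire disk."""
    return mount_disks[root]


if __name__ == "__main__":
    agent_disks = {"/dcos/volume0": 74}  # value from the journal output
    roots = candidate_mount_disks(agent_disks, 25)  # "size": 25 in the app
    print(roots)  # ['/dcos/volume0']
    print(reserved_mb(agent_disks, roots[0]))  # 74, not 25
    print(candidate_mount_disks(agent_disks, 100))  # [] since no single disk is large enough
```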

Cloud provider resources

Cloud provider storage services are typically used to back DC/OS mount volumes. This reference material can be useful when designing a production DC/OS deployment:

- Amazon: EBS

- Azure: About disks and VHDs for Azure virtual machines

- Azure: Introduction to Microsoft Azure storage

Best practices

Mount disk resources are primarily intended for stateful services, such as Kafka and Cassandra, that benefit from having dedicated storage available throughout the cluster. Any service that uses a Mount disk resource has exclusive access to the reserved resource. However, it is still important to consider the performance and reliability requirements of the service. The performance of a Mount disk resource depends on the characteristics of the underlying storage, and DC/OS does not provide any data replication services. Consider the following:

- Use Mount disk resources with stateful services that have strict storage requirements.

- Carefully consider the filesystem type, storage media (network attached, SSD, etc.), and volume characteristics (RAID levels, sizing, etc.) based on the storage needs and requirements of the stateful service.

- Label Mesos agents using a Mesos attribute that reflects the characteristics of the agent’s disk mounts, for example IOPS200 or RAID1.

- Associate stateful services with storage agents using Mesos attribute constraints.

- Consider isolating demanding storage services to dedicated storage agents, since the filesystem page cache is a host-level shared resource.

- Ensure that all services using Mount disk resources are designed to handle the permanent loss of one or more Mount disk resources. Services are still responsible for managing data replication and retention, graceful recovery from failed agents, and backups of critical service state.
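Mesos attributes and Marathon constraints work together. Agents carry attributes that describe their disks, and a service's `constraints` field limits placement to agents whose attributes match, for example `"constraints": [["storage", "CLUSTER", "IOPS200"]]`. The Python sketch below models only two Marathon operators to show how such labels narrow placement. The first is `CLUSTER` with an explicit value, treated as an exact match. The second is `LIKE`, a regular expression assumed here to match the whole attribute value. The attribute names `storage` and `raid`, the agent names, and the helpers `satisfies` and `agents_matching` are hypothetical. This is not Marathon's implementation.

```python
import re


def satisfies(attributes, constraint):
    """Check one [field, operator, value] constraint against agent attributes."""
    field, operator, value = constraint
    actual = attributes.get(field)
    if actual is None:
        return False  # agents without the attribute are never selected here
    if operator == "CLUSTER":
        return actual == value  # only CLUSTER with an explicit value is modeled
    if operator == "LIKE":
        return re.fullmatch(value, actual) is not None  # assumed full-match
    raise ValueError(f"operator not modeled in this sketch: {operator}")


def agents_matching(agents, constraints):
    """Return names of agents whose attributes satisfy every constraint."""
    return [name for name, attrs in agents.items()
            if all(satisfies(attrs, c) for c in constraints)]


if __name__ == "__main__":
    agents = {
        "agent-1": {"storage": "IOPS200", "raid": "RAID1"},
        "agent-2": {"storage": "IOPS100"},
        "agent-3": {},
    }
    print(agents_matching(agents, [["storage", "CLUSTER", "IOPS200"]]))  # ['agent-1']
    print(agents_matching(agents, [["storage", "LIKE", "IOPS.*"]]))  # ['agent-1', 'agent-2']
```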

DC/OS Documentation
