Mesosphere® DC/OS™ cluster nodes generate logs that contain diagnostic and status information for DC/OS core components and DC/OS services. DC/OS includes a built-in log pipeline that can transmit all of these logs to an aggregated log database.

Service, task, and node logs

The logging component provides an HTTP API, /system/v1/logs/, that exposes the system logs. To access the logs of DC/OS scheduler services, such as Marathon™ or Kafka®, run the following CLI command:

dcos service log --follow <scheduler-service-name>

You can access DC/OS task logs by running this CLI command:

dcos task log --follow <service-name>

To access the logs for the leading master node, run the following CLI command:

dcos node log --leader

To access the logs for an agent node, run dcos node to get the Apache® Mesos® IDs of your nodes, then run the following CLI command:

dcos node log --mesos-id=<node-id>
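On clusters with many agents, you may want to collect the Mesos IDs programmatically instead of copying them by hand. The following Python sketch assumes that dcos node --json prints a JSON array of node objects that each carry an "id" field and, usually, a "hostname" field; verify this against the output of your CLI version. The helper names are hypothetical, and the sketch only builds the dcos node log argument lists rather than running them.

```python
import json
import subprocess
from typing import Dict, List


def fetch_node_json() -> str:
    """Run `dcos node --json` (requires an installed, logged-in DC/OS CLI)."""
    result = subprocess.run(
        ["dcos", "node", "--json"], capture_output=True, text=True, check=True
    )
    return result.stdout


def agent_ids_by_hostname(raw_json: str) -> Dict[str, str]:
    """Map each node's hostname to its Mesos ID.

    Assumption: the JSON is an array of objects with an "id" key and an
    optional "hostname" key; nodes without a hostname are keyed by their ID.
    """
    nodes = json.loads(raw_json)
    return {
        node.get("hostname", node["id"]): node["id"]
        for node in nodes
        if "id" in node
    }


def node_log_command(mesos_id: str) -> List[str]:
    """Build the argv for `dcos node log --mesos-id=<node-id>`."""
    return ["dcos", "node", "log", f"--mesos-id={mesos_id}"]
```

To print the logs of every agent in turn, you could pass each result of node_log_command to subprocess.run, using the IDs returned by agent_ids_by_hostname(fetch_node_json()).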

You can download all the log files for your service from the Services > Services tab in the DC/OS web interface. You can also monitor stdout and stderr output there.

For more information, see the Service and Task Logs quick start guide.
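The logging HTTP API at /system/v1/logs/ can also be called directly, for example from scripts. The following Python sketch only builds the request; it does not send it. The sub-path under /system/v1/logs/ and any query parameters are left to the caller because they are defined in the Logging Reference, and the Authorization header format ("token=<authentication token>") is an assumption based on how DC/OS HTTP APIs are commonly called. The function name build_logs_request is hypothetical.

```python
from typing import Mapping, Optional
from urllib.parse import urlencode
from urllib.request import Request


def build_logs_request(
    cluster_url: str,
    path: str,
    token: str,
    params: Optional[Mapping[str, str]] = None,
    accept: str = "text/plain",
) -> Request:
    """Build (but do not send) a GET request to the DC/OS logging API.

    `path` is appended to /system/v1/logs/; consult the Logging Reference
    for the valid sub-paths and query parameters.
    """
    url = cluster_url.rstrip("/") + "/system/v1/logs/" + path.lstrip("/")
    if params:
        url += "?" + urlencode(params)
    request = Request(url, method="GET")
    # Assumed header format: "Authorization: token=<authentication token>".
    request.add_header("Authorization", f"token={token}")
    request.add_header("Accept", accept)
    return request
```

You would then send the request with urllib.request.urlopen, supplying an SSL context that trusts your cluster's certificate authority.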

System logs

DC/OS components use systemd-journald to store their logs. To access the DC/OS core component logs, SSH into a node and run this command to see the logs of all DC/OS components for the current boot:

journalctl -u "dcos-*" -b

To view the logs for a specific component, pass its systemd unit name. For example, to access Admin Router logs, run this command:

journalctl -u dcos-nginx -b
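To get a quick overview of which components are logging errors, you can ask journalctl for JSON output and summarize it. This Python sketch assumes it runs on a cluster node with permission to read the journal. It relies on the standard journald fields _SYSTEMD_UNIT and PRIORITY; treating priorities 0 to 3 (emerg through err) as errors is a choice made for this example, and the function names are hypothetical.

```python
import json
import subprocess
from collections import Counter
from typing import Iterable, List, Tuple


def read_journal(unit_pattern: str = "dcos-*") -> List[str]:
    """Return journal entries for the current boot, one JSON object per line."""
    # No shell is involved, so the glob is passed to journalctl unexpanded.
    proc = subprocess.run(
        ["journalctl", "-u", unit_pattern, "-b", "-o", "json", "--no-pager"],
        capture_output=True, text=True, check=True,
    )
    return proc.stdout.splitlines()


def summarize(lines: Iterable[str]) -> Tuple[Counter, Counter]:
    """Count all entries and error-level entries (priority 0-3) per unit."""
    totals: Counter = Counter()
    errors: Counter = Counter()
    for line in lines:
        if not line.strip():
            continue
        entry = json.loads(line)
        unit = entry.get("_SYSTEMD_UNIT", "unknown")
        totals[unit] += 1
        # journald stores PRIORITY as a string; default to "6" (info).
        if int(entry.get("PRIORITY", "6")) <= 3:
            errors[unit] += 1
    return totals, errors
```

For example, summarize(read_journal("dcos-nginx")) would count Admin Router entries only.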

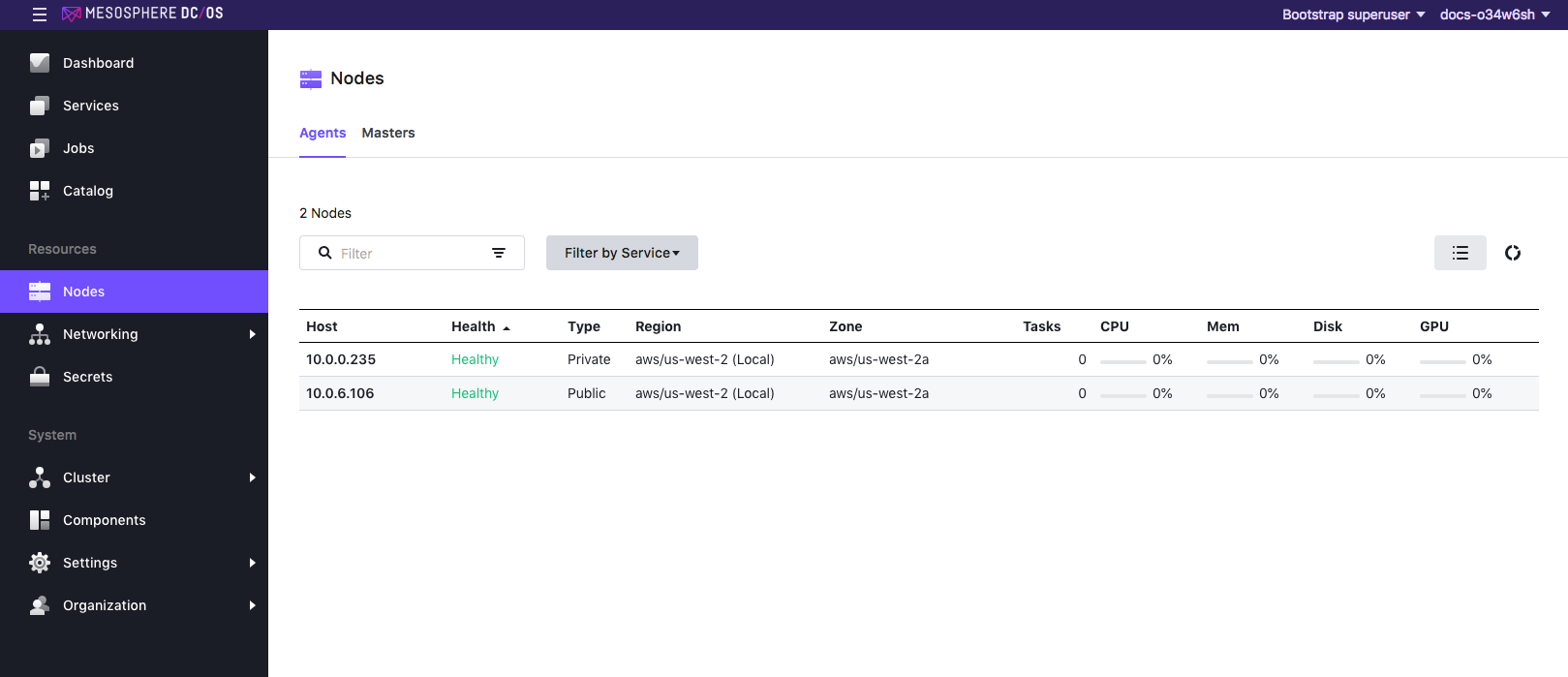

You can find which components are unhealthy in the DC/OS web interface Nodes tab.

Figure 1. System health log showing nodes

Log aggregation

Streaming logs from individual machines in your cluster is not always the best way to examine events and debug issues. For log aggregation, we currently suggest either ELK or Splunk; see the Log Aggregation topic for details.

Logging Quick Start: Getting started with DC/OS logging.

Accessing system and component logs (Enterprise): Managing user access to system and component logs.

Configuring Task Log Output and Retention: Task environment variables that influence logging.

Controlling Access to Task Logs (Enterprise): Managing user access to task logs using Marathon groups.

Log Aggregation: Aggregating system logs with ELK and Splunk.

Logging Reference: Using the Logging API.

Logging API Examples: Examples for the Logging API.
